See question 16.

Absolutely. See question 1.

Same question as question 8.

I am not sure what you are asking. What do you mean by “a high-level test strategy for all testers”? And I am a bit confused by the second part: “specifically around the context of what you are testing”. Any test strategy depends on context, so what kind of strategy are you thinking of that does not depend on any context? Maybe you are talking about a way of working?

I think of my testing strategy on different levels and from different perspectives all the time. See question 16. I can imagine that we think of testing at a high level at the beginning of a project. For example: performance is important, so we need to do performance testing at some point, so let’s make sure we have the right tooling and environment ready.

Any test strategy that stays high-level is not something I would value very much. A strategy needs to give insight into WHAT you are going to test and HOW, which is pretty specific. I can imagine that when you start to work on a new project or new feature, it takes time to learn about the product. We have to deal with not knowing many details yet. This knowledge will grow and we will learn the necessary product details.

Interesting question. What do you mean by not working?

- Not finding (enough) bugs?

- Bugs found are not important enough?

- Get the wrong information from testing?

- Too expensive?

- Not finished in time?

- Doing too much testing?

- Testing the wrong things?

- Using the wrong tools?

- Not done according to company policies?

- Environments are blocked because tests often crash the product?

- Can’t be executed because of a lack of skills?

- Stakeholders are not happy with the test strategy?

- …

Often only a part of the strategy is not working. Signs and symptoms depend on our missions (what do your clients, stakeholders or team expect from testing?). I think going through the list above might give you a few ideas.

Also, how do you answer the question: when do you stop testing? When is your product good enough?

James Bach wrote a blog post, “How Much is Enough? Testing as Story-Telling”, and an article about “a framework for good enough testing”. Michael Bolton also wrote an interesting blog post, “When Do We Stop a Test?”.

I suggest talking about testing and quality with your team and stakeholders. Do a retrospective every now and then to find out if there are improvement opportunities (which can also mean testing less). Tell the three-part testing story to your team and stakeholders often, and decide together if your test strategy is good enough. The three-part testing story helps you gain insight into:

- the product story

- the testing story

- the story about the quality of testing

I cannot answer this question because I miss the context and I have no clue what kind of product we are talking about here.

Generally speaking I would learn about the product, think about where it might fail, think of ways to find those problems and think of ways to explore the product to find unknown risks. This is the same approach as I described in question 1:

- Missions for your testing

- Product analysis

- Oracles & information sources

- Quality characteristics

- Context: project environment

- Test strategies

I cannot answer this question because I miss the context and I have no clue what kind of product we are talking about here. See my answer for question 22.

See my answer at question 22.

You have to be more specific in your question. I miss the specifics of the context and I have no clue what kind of product we are talking about here.

Follow the general meta approach described earlier but adapt it for the specific context you are in (waterfall, agile or whatever) and the product you are testing.

I am afraid I do not have an example for the context you are in.

I am a bit worried by your question. If your main goal is test automation, you are doing it wrong! Automation cannot be the mission in any test strategy. I am not saying you shouldn’t do automation. I think any project could benefit from automation. But it needs to solve a problem. Automation in testing is never a solution in itself.

I would suggest a couple of heuristics for sustainable automation:

- make automation part of your daily work and a team responsibility

- treat the test code as production code

- have people working on it who have the right skills (automation = programming, what to automate = testing skill)

- think what feedback loops you need (what information you need to collect)

Also have a look at this:

- TRIMS - a mnemonic for valuable automation in testing by Richard Bradshaw

- Which Test Cases Should I Automate? by Michael Bolton

- Discussion here on the Club on “what to automate”.

When I summarise my testing I use the three-part testing story. The testing story helps me summarise my knowledge about:

- the product

- the testing I have done

- the quality of my testing

The amount of detail depends on who I am talking to (developers, testers, managers, directors, clients) and what they want to know. So I often start my reporting by asking what they would like to know.

Test Strategy is a solution to a complex problem: how do we meet the information needs of our team & stakeholders in the most efficient way possible? A Test Strategy is the starting point for every tester: it helps you to do the right things, gives testing structure and provides insight into risks and coverage.

A Test Strategy is not a document but “a set of ideas that guide the choice of tests to be performed”. (Rapid Software Testing).

Test strategy contains the ideas that guide your testing effort and deals with what to test, and how to do it. It is in the combination of WHAT and HOW you find the real strategy. If you separate the WHAT and the HOW, it becomes general and quite useless. (Rikard Edgren).

I would learn about the product, think about where it might fail, think of ways to find those problems and think of ways to explore the product to find unknown risks.

If you need guidance on how to create a Test Strategy do these activities:

- Missions for your testing

- Product analysis

- Oracles & information sources

- Quality characteristics

- Context: project environment

- Test strategies

Be aware that the activities do not need to be done specifically in this order. Most likely you will do this in an iterative way, building your evolutionary Test Strategy as you go.

Welcome! Thank you for watching and asking your question!

There are many stakeholders for testing and therefore your test strategy, among which are:

- Your team

- Other teams

- Managers (and managers of those managers)

- Clients

- Users

- Maintenance/OPS

- Business departments

- Auditors

Have a look at question 7 on how to get them involved.

Interesting question. I would say that a test strategy should be specific: it takes the context into account and the methodology you are using in your team is part of that context! This means that a Test Strategy cannot be methodology agnostic.

I think this question is answered in question 11.

Not necessarily. A test strategy is not a document but it can be documented. I would suggest not writing a test plan if you do not have to. In many contexts you do not need a test plan. A test plan is often full of fluff that nobody reads anyway.

You will always have a test strategy, even if you are not aware of it. It is in your head whenever you test. Your mental model of what to test and how is a test strategy. As soon as you need to communicate it, it might be handy to document parts of it. I like mind maps and a risk-task list, amongst other things, to help me talk about my test strategy. These visualisations of the product and my test coverage create insight and overview, which help me determine my next steps.

So I would recommend talking to your team and other stakeholders about how to communicate your test strategy. You’ll find out what helps you and what does not.

Absolutely amazing work here @huib.schoots! Thank you so much for taking the time to answer all of these questions

That is a trick question. A test strategy is a mental model. Not a document.

I can’t show you the examples of the Test Strategy documents, but I can describe them: I made a 50+ page document called “Master Test Plan (MTP)” describing everything about the test process: summary of project (copied from the project plan), management summary, deliverables, scope, responsibility assignment matrix, workflow, stakeholders, escalation paths, test organisation, conditions, test basis, quality gates, exit criteria, test types, findings process, planning and budget, infrastructure, test environments and 2 or 3 pages on PRA and Test Strategy.

Google “Test Strategy” and the majority of links you will find are like that: templates for documents full of fluff. Or vague and general statements about risks and a test approach.

Back to my bad examples: in that time (around 2002 - 2005) I wrote MTPs with vague Test Strategies. It took loads of time and effort to make those documents and not many people would read them. A waste of my valuable time. Some parts were useful, but my team did not read the documents because they were boring, full of fluff and lacked what the team wanted to know: what and how are we going to test? My documents never became very specific on those topics. And another big mistake: the document never got updated during the project. Once done, it was signed off and put in a drawer. I learned that testing is a team sport. You have to create a test strategy with your team. Get people involved by talking to them instead of sending them documents.

The PRA was a list of quality attributes with a simple calculation (possible impact x chance of failure), and based on the resulting number we assigned a “risk class” (high, medium, low). Based on the risk class we then assigned a test intensity (heavy, medium or low). This method is still taught to people. Look here to see what it looks like.
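To make the calculation concrete, here is a minimal sketch of that kind of PRA scoring. The 1 to 5 scales, the thresholds and all names are assumptions made up for illustration; the method as I used it did not prescribe these exact numbers. It only shows the mechanics of impact x chance, then risk class, then test intensity.

```python
from dataclasses import dataclass

# Hypothetical thresholds on a 1-25 score (assumed 1-5 scales for both factors).
HIGH_THRESHOLD = 15
MEDIUM_THRESHOLD = 6

# Risk class -> test intensity, as described in the text.
INTENSITY_BY_CLASS = {"high": "heavy", "medium": "medium", "low": "low"}


@dataclass
class QualityAttribute:
    name: str
    impact: int  # assumed scale: 1 (minor) to 5 (severe)
    chance: int  # assumed scale: 1 (unlikely) to 5 (almost certain)


def risk_score(attr: QualityAttribute) -> int:
    """Possible impact multiplied by chance of failure."""
    return attr.impact * attr.chance


def risk_class(score: int) -> str:
    """Map a numeric score to a risk class using the assumed thresholds."""
    if score >= HIGH_THRESHOLD:
        return "high"
    if score >= MEDIUM_THRESHOLD:
        return "medium"
    return "low"


def intensity_for(attr: QualityAttribute) -> str:
    """Look up the test intensity that belongs to the attribute's risk class."""
    return INTENSITY_BY_CLASS[risk_class(risk_score(attr))]


if __name__ == "__main__":
    for attr in [
        QualityAttribute("performance", impact=5, chance=4),
        QualityAttribute("usability", impact=2, chance=3),
        QualityAttribute("portability", impact=1, chance=2),
    ]:
        print(attr.name, risk_score(attr), risk_class(risk_score(attr)), intensity_for(attr))
```

Note how mechanical this is: once the numbers are filled in upfront, the test intensity follows automatically, without anyone having seen the software yet.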

What went wrong is that we created a document upfront. It was fixed. While I now know that learning is a process, just like your test strategy: it is a process! It develops over time. We assigned test intensity to risk classes and we even decided upfront which test techniques we would use. Without really knowing what the software would look like. Dangerous assumptions. Now I know that it is important to develop the test strategy while we test. The more we know, the better we are able to think about potential problems. And the better we can decide what we need to do to find those problems.

I also created a PRA (product risk analysis) in a big workshop with lots of people. It took a lot of time and, it seemed, wasn’t valuable enough for the attendees. The lists we created were good and the discussions created insight. But I also learned that talking to people in smaller groups creates deeper discussions. And talking about risks and my testing over time, going into more detail as we went (you could call it evolutionary test design), helped me and the stakeholders to learn faster and create a deeper understanding of what was really going on.

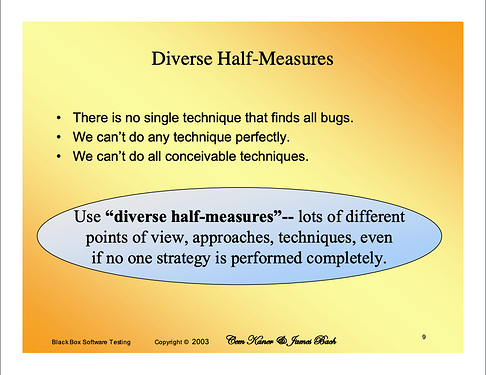

My old approach assumed “the higher the risk, the more important the test to be performed and the more test intensity we need.” Now I know that high risk does not necessarily mean that the tests also need to have more depth or a more formal test design technique should be applied. To find a diversity of problems, we need to use a diverse test strategy, which means a diverse set of approaches, techniques and tactics.

(source: Black Box Software Testing by Cem Kaner & James Bach)

I am not sure what you mean by the words “adhered to all levels”. Level could point to test level or level in the organisation. I assume that you mean: “How do I make sure that everybody in the organisation is on the same page?”

By talking to people about the testing! By talking to them about stuff they care about. By showing that you are helping them by delivering the information they care about (which is probably not details of the testing you do, but is a story about risks and quality). It is hard to satisfy different stakeholders, so talk to them about what they really need from you. Also see question 7.

I prefer talking to people over sending documents: it is an interactive way of learning whether my thoughts match the other person’s thoughts. It may sound strange, but I meet a lot of people who think talking to others costs too much time or is hard to organise. They prefer to write stuff down. Which is okay too, but at some point we need to talk about it to gain deeper insight. Also: don’t wait too long to talk with others. Don’t try to be complete or perfect; build fast feedback loops into building your test strategy.

I like to use models/visualisations of my thoughts to make conversation easier. I created workshops on thinking and working visually with Jean-Paul Varwijk and later with Ruud Cox. Later I learned about storytelling and how to deal with complexity. There is a lot of complexity in what we have to deal with: the complexity of the products we are building, but also the complexity of working with people, because communication and understanding each other is hard!

See question 15, question 32 and question 33.