Day 19 already! I hope you can all appreciate how much we have covered already - well done!

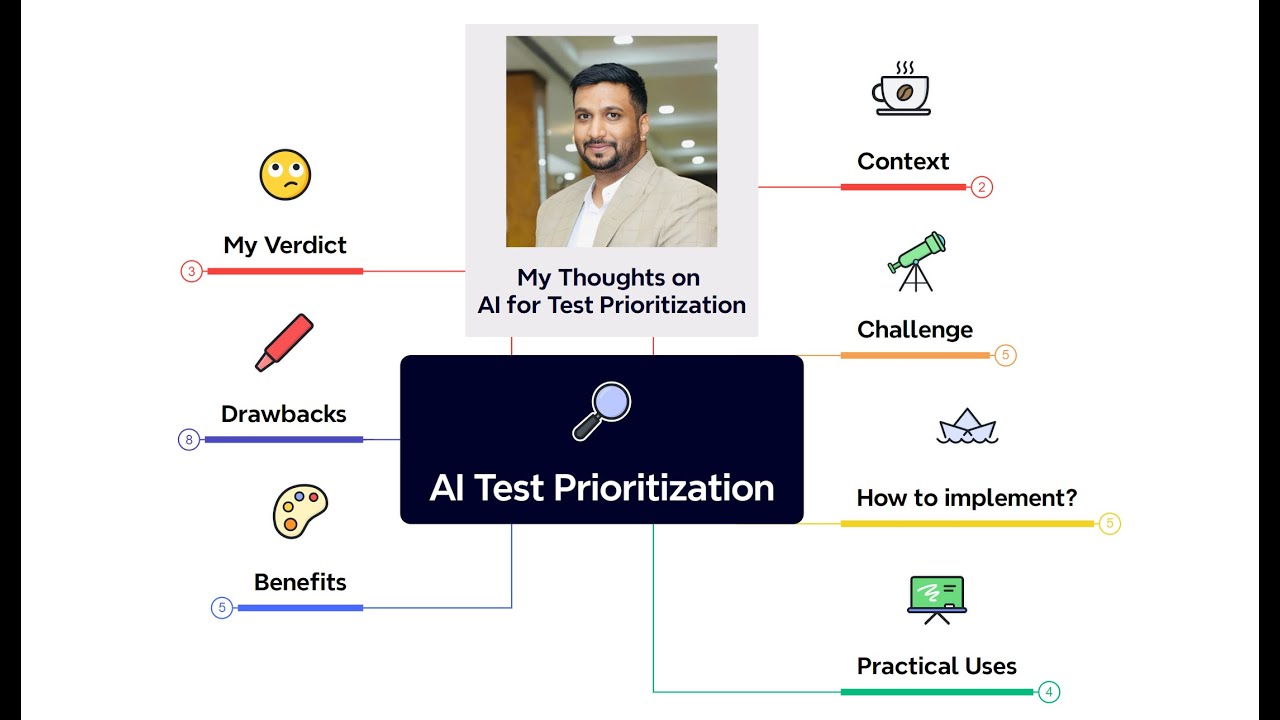

Today we want to turn our attention to whether AI can help us make decisions about test selection and prioritisation and evaluate some of the risks and benefits of this as an approach.

Using data to decide what to test, and how much, has been around for a long time (most testers are familiar with the idea of Risk Based Testing), and it’s natural to think about automating these decisions to speed things up. The next technical step in this process is to delegate to an AI model that learns from data in your context about the testing performed and the observable impact of that testing.
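To make the idea of data-driven selection a little more concrete, here is a minimal sketch of a hand-written risk score, the kind of rule an AI tool would try to learn from your data rather than have hard-coded. Everything in it is an assumption for illustration: the `CheckHistory` fields, the 0.6/0.4 weights and the time budget are hypothetical and not taken from any real tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckHistory:
    """Hypothetical record of what we know about one automated check."""
    name: str
    runs: int                  # how many times it has been executed
    failures: int              # how many of those runs failed
    duration_s: float          # typical run time in seconds
    covered_files: frozenset   # source files the check is known to exercise


def risk_score(check, changed_files):
    """Combine past failure rate with relevance to the current change."""
    # Smoothed failure rate so checks with little history are not scored 0 or 1.
    failure_rate = (check.failures + 1) / (check.runs + 2)
    # 1.0 if the check exercises any changed file, otherwise 0.0.
    touches_change = 1.0 if check.covered_files & changed_files else 0.0
    # Assumed weights; a learned model would fit these from your own data.
    return 0.6 * failure_rate + 0.4 * touches_change


def prioritise(checks, changed_files, time_budget_s):
    """Rank checks by risk score, then greedily fill the time budget."""
    ranked = sorted(checks, key=lambda c: risk_score(c, changed_files), reverse=True)
    selected, used = [], 0.0
    for check in ranked:
        if used + check.duration_s <= time_budget_s:
            selected.append(check.name)
            used += check.duration_s
    return selected


history = [
    CheckHistory("login", 100, 20, 30.0, frozenset({"auth.py"})),
    CheckHistory("checkout", 100, 2, 60.0, frozenset({"cart.py"})),
    CheckHistory("search", 100, 1, 20.0, frozenset({"search.py"})),
]
print(prioritise(history, changed_files={"cart.py"}, time_budget_s=90.0))
```

Even in this toy version, the questions from today's task show up: the weights encode someone's judgement, checks with little history are hard to score, and anything that does not fit the budget is silently dropped.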

The critical question is: should we?

Task Steps

You have two options for today’s task (you can do both if you want):

- Option 1 - If your company already uses an AI powered tool for test prioritisation and selection, then write a short case study and share it with the community by responding to this post. Consider sharing:

  - The tool you are using

  - How does the tool select/prioritise tests? Is this understandable to you?

  - How does your team use the tool? For example, only for automated checks or only for Regression?

  - Has the performance of the tool improved over time?

  - What are the key benefits your team gains by using this tool?

  - Have there been any notable instances where the tool was wrong?

- Option 2 - Consider and evaluate the idea of using AI to select and prioritise your testing.

  - Find and read a short article that discusses the use of AI in test prioritisation and selection.

    - Tip: if you are short on time, why not ask your favourite chatbot or copilot to summarise the current approaches and benefits of using AI in test prioritisation and selection?

  - Consider how you or your team currently perform this task. Some thinking prompts are:

    - To what extent do you need to select/prioritise tests in your context?

    - What factors do you use when selecting/prioritising tests? Are they qualitative or quantitative?

    - How do you make decisions when there is a lack of data?

    - What is the implication if you get this wrong?

  - In your context, would delegating this task to an AI be valuable? If so, how would your team benefit?

  - What are the risks of delegating test prioritisation and selection to an AI model? Some thinking prompts are:

    - How might test prioritisation and selection fail, and what would the impact be?

    - Do you need to understand and explain the decision made by the AI?

    - “How did test/QA miss this?” is an unjust but common complaint - how does this change if an AI is making the decisions about what to test?

  - How could you mitigate these risks?

    - If we mitigate risks using a human in the loop, how does this impact the benefits of using AI?

  - How could you fairly evaluate the performance of an AI tool in this task?

  - Share your key insights by replying to this post. Consider sharing:

    - A brief overview of your context (e.g. what industry you work in or the type of applications you test).

    - Your key insights about the benefits and risks of adopting AI for test prioritisation and selection.

Why Take Part

- Understanding where AI can help: There is excitement/hype about using AI to improve and accelerate testing. For teams managing large numbers of tests, complex systems or time-consuming tests, being more data-driven about selecting and prioritising tests might provide real benefits. By taking part in today’s task, you critically evaluate whether it works for your context, learn about the specific risks of delegating responsibility to AI, and become better prepared to make a considered decision about AI-based test selection and prioritisation.