It’s Day 17! Today, we’re going to explore the potential of using AI to automate bug detection and reporting processes.

As testers, we know that efficient bug reporting is important for effective communication and collaboration with our teams. However, this process can be time-consuming and error-prone, especially when dealing with complex applications or large test suites. AI-powered bug reporting tools promise to streamline this process by automatically detecting and reporting defects, potentially saving time and improving accuracy.

As with any AI technology, though, it’s important to critically evaluate the effectiveness and potential risks of using AI for bug reporting. In today’s task, we’ll experiment with an AI tool for bug detection and reporting and assess the quality of its output.

Task Steps

-

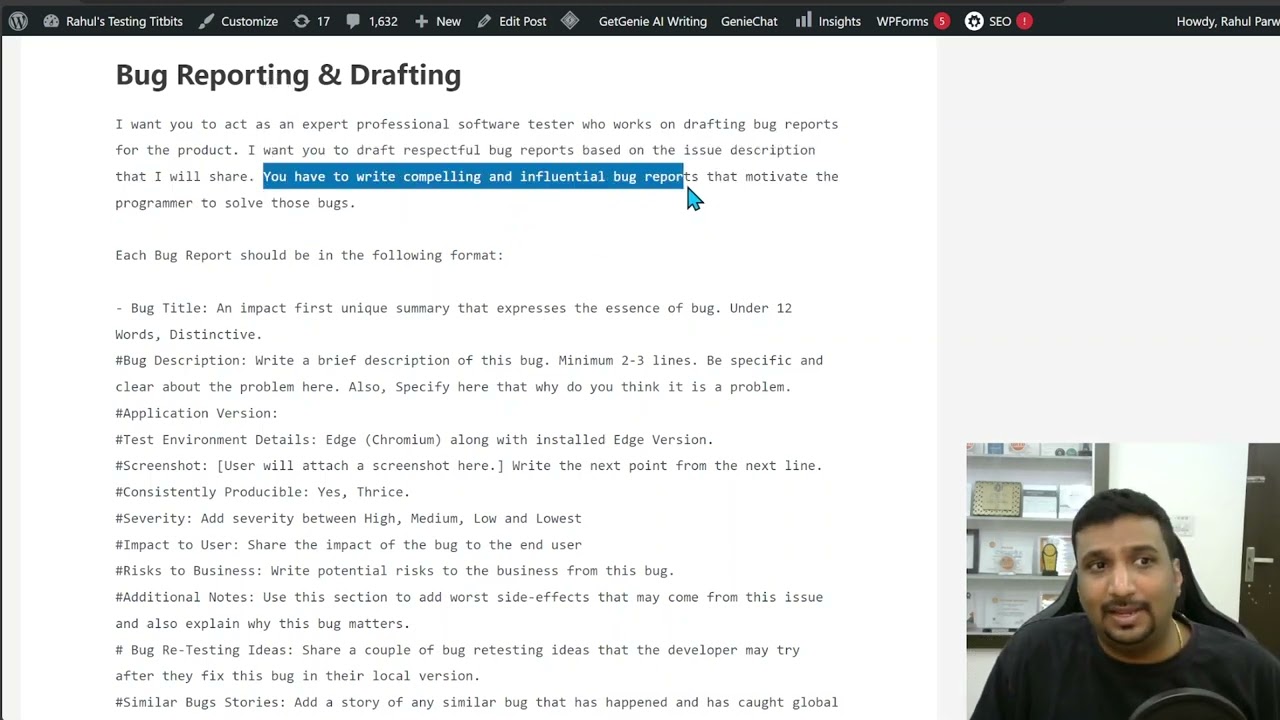

Experiment with AI for Bug Reporting: Choose an AI bug detection and reporting tool or platform. Earlier in this challenge, we created lists of tools and their features, so review those posts or conduct your own research. Many free or trial versions are available online. Explore the tool’s functionalities and experiment with it on a sample application or project.

- Evaluate the Reporting Quality: Assess the accuracy, completeness and quality of the bug reports generated by AI. Consider:

  - Are the bugs identified by the AI valid issues?

  - Are the AI-generated reports detailed, clear and actionable enough?

  - How does the quality of information compare to manually created bug reports?

- Identify Risks and Limitations: Reflect on the potential risks associated with automating bug reporting with AI:

  - False Positives: How likely is the AI to flag non-existent issues?

  - False Negatives: Can the AI miss critical bugs altogether?

  - Bias: Could the AI be biased towards certain types of bugs or code structures?

- Data Usage and Protection: Investigate how the AI tool utilises your defect data to generate reports. Consider these questions:

  - Data Anonymisation: Is your data anonymised before being used by the AI?

  - Data Security: How is your data secured within the tool?

  - Data Ownership: Who owns the data collected by the AI tool?

- Share Your Findings: Summarise your experience in this post. Consider including:

  - The AI tool you used and your experience with its functionalities

  - Your assessment of the quality of the bug reports

  - The risks and limitations you identified

  - Your perspective on data usage and potential data protection issues

  - Your overall evaluation of AI’s potential for automating bug reporting. Consider:

    - How did it compare with your traditional bug reporting methods?

    - Did it identify any bugs you might have missed?

    - How did it impact the overall efficiency of your bug-reporting process?
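If you want to put numbers on the false positive and false negative questions above, one option is to compare the bugs the AI flagged with the bugs you confirmed by hand. The sketch below is only an illustration: the `TriageResult` class, the `triage` function and the bug IDs are hypothetical and do not come from any particular tool. It assumes you can list both sets of bugs under shared identifiers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TriageResult:
    """Counts from comparing AI-reported bugs with manually confirmed bugs."""

    true_positives: int   # AI reports confirmed as real bugs
    false_positives: int  # AI reports that turned out not to be bugs
    false_negatives: int  # confirmed bugs the AI did not report

    @property
    def precision(self) -> float:
        """Share of AI-reported bugs that were real."""
        flagged = self.true_positives + self.false_positives
        return self.true_positives / flagged if flagged else 0.0

    @property
    def recall(self) -> float:
        """Share of confirmed bugs that the AI found."""
        actual = self.true_positives + self.false_negatives
        return self.true_positives / actual if actual else 0.0


def triage(ai_reported: set[str], confirmed: set[str]) -> TriageResult:
    """Compare bug IDs flagged by the AI with bugs confirmed by a human."""
    return TriageResult(
        true_positives=len(ai_reported & confirmed),
        false_positives=len(ai_reported - confirmed),
        false_negatives=len(confirmed - ai_reported),
    )


if __name__ == "__main__":
    # Hypothetical IDs, for illustration only.
    ai_reported = {"BUG-1", "BUG-2", "BUG-3", "BUG-4"}
    confirmed = {"BUG-1", "BUG-2", "BUG-5"}
    result = triage(ai_reported, confirmed)
    print(f"precision={result.precision:.2f} recall={result.recall:.2f}")
```

With the sample IDs, two of the four AI reports are real and one confirmed bug is missed, so the script prints `precision=0.50 recall=0.67`. Low precision points to a false positive problem, low recall to missed bugs. Keep in mind that the confirmed set only contains bugs somebody actually found, so recall measured this way is likely to be optimistic.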

Why Take Part

- Explore Efficiency Gains: Discover how AI can enhance the bug reporting process, potentially saving time and improving report quality.

- Understand AI Limitations: By critically evaluating AI tools for bug reporting, you’ll gain insights into their current capabilities and limitations, helping to set realistic expectations.

- Enhance Testing Practices: Sharing your findings contributes to our collective understanding of AI’s role and potential in automating bug detection and reporting.
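On the data protection questions in the task steps: if you are unsure how a tool handles your defect data, a cautious option is to redact obvious identifiers before pasting a report into the tool or into your post. The following is a minimal sketch that assumes plain-text bug reports. The `PATTERNS` table and the `redact` function are hypothetical, and the regular expressions are deliberately simple, so they will not catch every kind of sensitive data.

```python
import re

# Hypothetical patterns; extend them for the identifiers your project uses.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "TOKEN": re.compile(
        r"\b(?:api[_-]?key|token|password)\s*[=:]\s*\S+", re.IGNORECASE
    ),
}


def redact(text: str) -> str:
    """Replace each pattern match with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    # Made-up report text, for illustration only.
    report = "Login fails for jane.doe@example.com from 10.0.0.12 with api_key=abc123"
    print(redact(report))
```

Treat this as a first filter, not as anonymisation in any formal sense: names, customer IDs and details in screenshots would still get through, so a manual read before sharing is still worthwhile.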