It’s Day 18! Throughout our 30 Days of AI in Testing journey, we’ve explored various applications of AI across different testing activities. While AI’s potential is undoubtedly exciting, we cannot ignore the personal frustrations that may have arisen as you experimented with these new technologies.

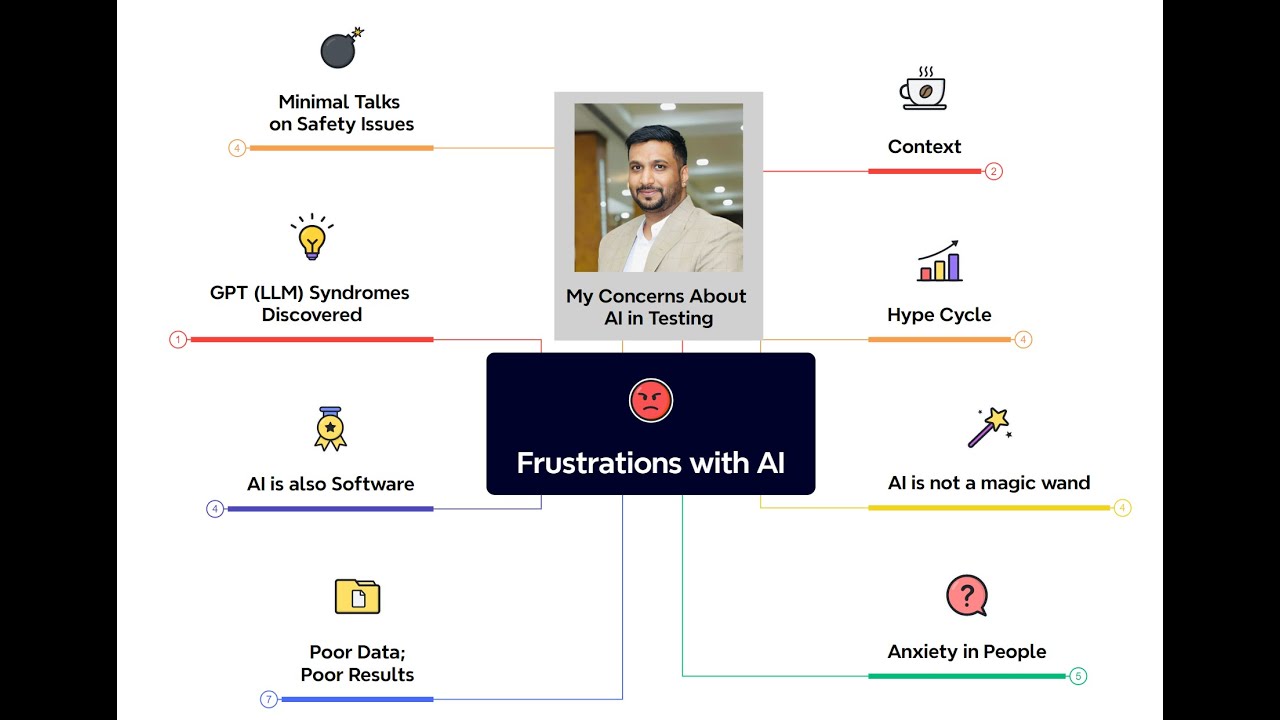

Today’s task provides an opportunity to share the personal frustrations or concerns you’ve encountered while working with AI during this challenge. By openly discussing these individual experiences, we can gain a deeper understanding of the potential pitfalls and identify areas for improvement with AI technologies.

Task Steps

- Identify Your Frustration: Think back to your experiences throughout the challenge. What aspect of AI in testing caused you the most frustration or concern? Here are some prompts to get you started:

- Limited Functionality: Did you find that the AI tools lacked the capabilities you were hoping for in specific testing areas (e.g., usability testing, security testing)?

- The Black Box Conundrum: Were you frustrated by the lack of transparency in some AI tools? Did it make it difficult to trust their results or learn from them?

- The Learning Curve Struggle: Did the complexity of some AI tools or the rapid pace of AI development leave you feeling overwhelmed?

- Bias in the Machine: Did you have concerns about potential bias in AI algorithms impacting the testing process (e.g., missing bugs affecting certain user demographics)?

- Data Privacy Worries: Are you uncomfortable with how AI tools might use or store your testing data? Do you have concerns about data security or anonymisation practices?

- The Job Security Conundrum: Do you worry that AI might automate testing tasks and make your job redundant?

Feel free to add your own frustration if the above prompts don’t resonate with you!

- Explain Your Perspective: Once you’ve identified your frustration, reply to this post and elaborate on why it’s a significant issue for you. Does it relate to your experience working with AI in testing?

- Bonus - Learn from Shared Experiences: Engaging with the personal experiences shared by others can provide valuable insights and shed light on challenges or frustrations you may not have considered. Like or reply to those who have broadened your perspective.

Why Take Part

- Identify Areas for Improvement: By openly discussing our frustrations with AI in testing, we can foster a more balanced approach to its implementation and development. We can also identify areas where AI tools, techniques, or practices need further refinement or improvement.