We’ve reached Day 30 of our 30 Days of AI in Testing Challenge!!

Big congrats for participating on any day throughout this challenge! All contributions make a difference and add to the value of this month-long initiative, so thank you for getting involved in whatever way you have.

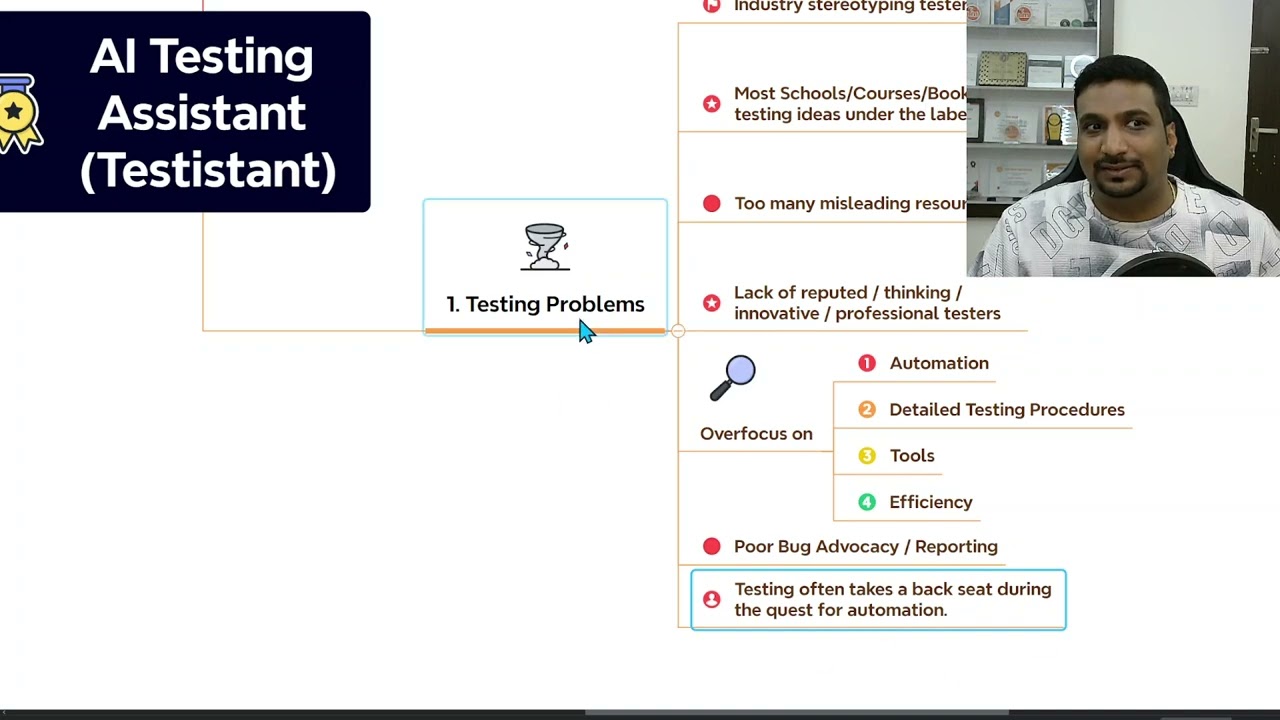

Today, we invite you to dream big and envision the ultimate AI companion for your testing adventures. Imagine an AI assistant tailored perfectly to your needs, one that enhances your testing processes and acts as your right hand in navigating the complexities of software testing.

Today’s Task:

Design your ideal AI testing assistant. Think about the functionalities, attributes, and interactions that would make this AI companion invaluable to your daily testing activities.

Task Steps:

- Envision the Perfect Assistant: Reflect on your daily testing routines and identify areas where an AI could offer support. What features and capabilities would make an AI assistant truly effective for your needs?

- Design the Persona and Interface: Get creative with how your AI Test Buddy would present itself. What would its persona be? How would it communicate with you, and through what interface?

- Outline Key Functionalities and Limitations: Detail the tasks your AI assistant would excel at. Could it automate mundane tasks, generate test cases, or provide real-time insights? Equally important, acknowledge what it wouldn’t do.

- Share Your Vision: Bring your AI Test Buddy to life by replying to this post with your concept. Feel free to include sketches or a detailed description. Paint a picture of how this assistant would integrate into your workflow, improve your productivity, and enhance your approach to testing.

Why Take Part

- Inspire Innovation: Set a vision for future tools and inspire potential tool developers with what’s truly desired in the field.

- Anticipate the Future: This task encourages you to think ahead about the evolving role of AI in testing, potentially preparing you to embrace upcoming advancements.